Welcome to the world of Docker, where containerization transforms the way we develop, ship, and manage applications.

In this beginner-friendly post, we’ll embark on a journey to understand the fundamental concepts of Docker, set up Docker on different platforms, and explore its real-world applications. By the end of this post, you’ll have a solid grasp of what Docker is and how it can revolutionize your software development process.

Understanding Containerization

Containerization is like magic for software developers. It allows you to package an application and its dependencies into a single unit, known as a container. Think of it as a self-contained box that holds everything your app needs to run smoothly, from libraries to system tools. Containers are lightweight, efficient, and highly portable.

A container

- is an isolated runtime inside Linux

- provides a private space under Linux

- runs under any modern Linux kernel

Each container has

- its own process space

- its own network interface

- its own disk space

By default, processes run as root (inside the container).
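
The private spaces described above are built on Linux kernel namespaces. As a rough sketch (this is not part of Docker itself), the Python snippet below lists the namespaces the current process belongs to by reading `/proc/self/ns`, which exists only on Linux. The function name `list_namespaces` is made up for this post. Running it on the host and then inside a container should typically show different identifiers for entries such as `pid`, `net`, and `mnt`.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process.

    Returns an empty dict when /proc/<pid>/ns is unavailable,
    for example on macOS or Windows.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces  # not Linux, or no such process
    for name in entries:
        try:
            # Each entry is a symlink whose target names the namespace.
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # skip entries we are not allowed to read
    return namespaces


if __name__ == "__main__":
    for name, identifier in list_namespaces().items():
        print(f"{name:12s} {identifier}")
```

Two processes that print the same identifier for a given entry share that namespace, while different identifiers mean they are isolated from each other in that respect.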

Docker vs. Traditional Virtualization

Before Docker, virtualization was the go-to method for running multiple applications on a single server. However, it had its drawbacks. Virtual machines (VMs) are resource-intensive and slow to start because each one runs a full guest operating system. Docker containers, on the other hand, are much more lightweight: they share the host OS kernel, which makes them faster to start and more efficient.

Use Cases for Docker

Docker is incredibly versatile and finds applications across various industries. It’s used for software development, testing, and production deployments. Whether you’re a developer, system administrator, or data scientist, Docker can streamline your workflow and simplify application management.

Setting up Docker

Installing Docker on Various Platforms

Getting started with Docker is easy, regardless of your operating system. Docker provides installation packages for Windows, macOS, and Linux. It’s a matter of a few clicks or commands to have Docker up and running on your machine.

To install Docker on your machine, check this post.
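
Once the installation is done, one simple way to confirm that the `docker` command is reachable is to ask it for its version. The snippet below is a minimal sketch of that check in Python. The function name `docker_version_line` is hypothetical, and it assumes the Docker CLI is on your `PATH` under the name `docker`.

```python
import shutil
import subprocess


def docker_version_line(executable="docker"):
    """Return the output of `<executable> --version`, or None if unavailable."""
    path = shutil.which(executable)
    if path is None:
        return None  # the command is not on PATH
    try:
        completed = subprocess.run(
            [path, "--version"],
            capture_output=True,
            text=True,
            timeout=10,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if completed.returncode != 0:
        return None
    return completed.stdout.strip() or None


if __name__ == "__main__":
    line = docker_version_line()
    if line is None:
        print("Docker CLI not found; the installation may not be complete.")
    else:
        print(line)
```

Note that this only checks the command-line client. It does not prove that the Docker Engine itself is running.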

Exploring Docker Desktop (for Windows and macOS)

For Windows and macOS users, Docker Desktop is a user-friendly tool that simplifies container management. It provides a graphical interface to manage containers, images, and more. It’s a great starting point for those new to Docker.

Docker Versioning and Compatibility

Docker evolves rapidly, with frequent updates and new features. It’s important to understand Docker’s versioning and compatibility matrix to ensure that your containers work seamlessly across different environments.
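
As a small illustration of a version check, the sketch below pulls a three-part version number out of a string and compares it with a required minimum. The sample string imitates the output of `docker --version`. Its build hash and the minimum `20.10.0` are arbitrary values chosen for this example, and the helper names are made up for this post.

```python
import re

# Matches the first "major.minor.patch" number found in a string.
_VERSION_RE = re.compile(r"(\d+)\.(\d+)\.(\d+)")


def parse_version(text):
    """Extract (major, minor, patch) as integers from a version string."""
    match = _VERSION_RE.search(text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.groups())


def meets_minimum(found, minimum):
    """Return True if the version in `found` is at least `minimum`."""
    return parse_version(found) >= parse_version(minimum)


if __name__ == "__main__":
    sample = "Docker version 24.0.7, build afdd53b"  # illustrative only
    print(parse_version(sample))
    print(meets_minimum(sample, "20.10.0"))
```

Comparing tuples of integers avoids the classic mistake of comparing version strings alphabetically, where "9.0.0" would sort after "20.10.0".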

Docker terminology

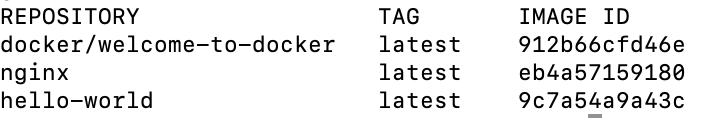

Docker Image: the packaged representation of a Docker container, comparable to a JAR or WAR file in the Java world.

Docker Container: the runtime of Docker. Basically a deployed and running Docker image, that is, an instance of the image.

Docker Engine: the code that manages Docker. It creates and runs Docker containers.
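
If the relationship between these three terms feels abstract, a toy model can help. The Python sketch below is purely an analogy and has nothing to do with how Docker is implemented. `Image`, `Container`, and `ToyEngine` are invented names, not Docker’s API. It only shows that one image can back many containers and that the engine is what creates them.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Image:
    """A read-only template, like a JAR or WAR file."""
    name: str
    tag: str = "latest"


@dataclass
class Container:
    """A running instance created from an image."""
    container_id: int
    image: Image
    running: bool = False


class ToyEngine:
    """Creates containers from images and keeps track of them."""

    def __init__(self):
        self._ids = count(1)
        self.containers = []

    def run(self, image):
        container = Container(next(self._ids), image, running=True)
        self.containers.append(container)
        return container


if __name__ == "__main__":
    engine = ToyEngine()
    web = Image("web")
    first = engine.run(web)
    second = engine.run(web)
    # Two separate containers, both instances of the same image.
    print(first.container_id, second.container_id, first.image == second.image)
```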

Docker Editions

Docker is available in Enterprise and Community editions.

Docker Enterprise is a CaaS (Containers as a Service) platform subscription (paid).

Docker Community is the free edition of Docker.

Question 1: What is containerization?

a) A process of shipping physical containers with software inside

b) A way to package applications and their dependencies

c) A type of virtual machine

d) A tool for managing servers

Correct answer: b) A way to package applications and their dependencies

Question 2: What is a key advantage of Docker containers over traditional virtual machines?

a) Docker containers are more secure

b) Docker containers are larger in size

c) Docker containers share the host OS kernel

d) Docker containers have a GUI

Correct answer: c) Docker containers share the host OS kernel

Question 3: Which of the following is NOT a common use case for Docker?

a) Simplifying application deployment

b) Creating virtual machines

c) Building and testing applications

d) Scaling applications on-demand

Correct answer: b) Creating virtual machines

Question 4: Which platforms does Docker provide installation packages for?

a) Windows, macOS, Linux

b) Windows only

c) macOS only

d) Linux only

Correct answer: a) Windows, macOS, Linux

Question 5: What is Docker Desktop primarily used for?

a) Creating virtual machines

b) Managing Docker containers and images

c) Writing code

d) Playing games

Correct answer: b) Managing Docker containers and images

Question 6: Why is it essential to be aware of Docker’s versioning and compatibility?

a) To ensure your containers work consistently across environments

b) To track the stock market

c) To play the latest video games

d) To learn about new Docker features

Correct answer: a) To ensure your containers work consistently across environments